‘Nudify’ Apps That Use AI to ‘Undress’ Women in Photos Are Soaring in Popularity

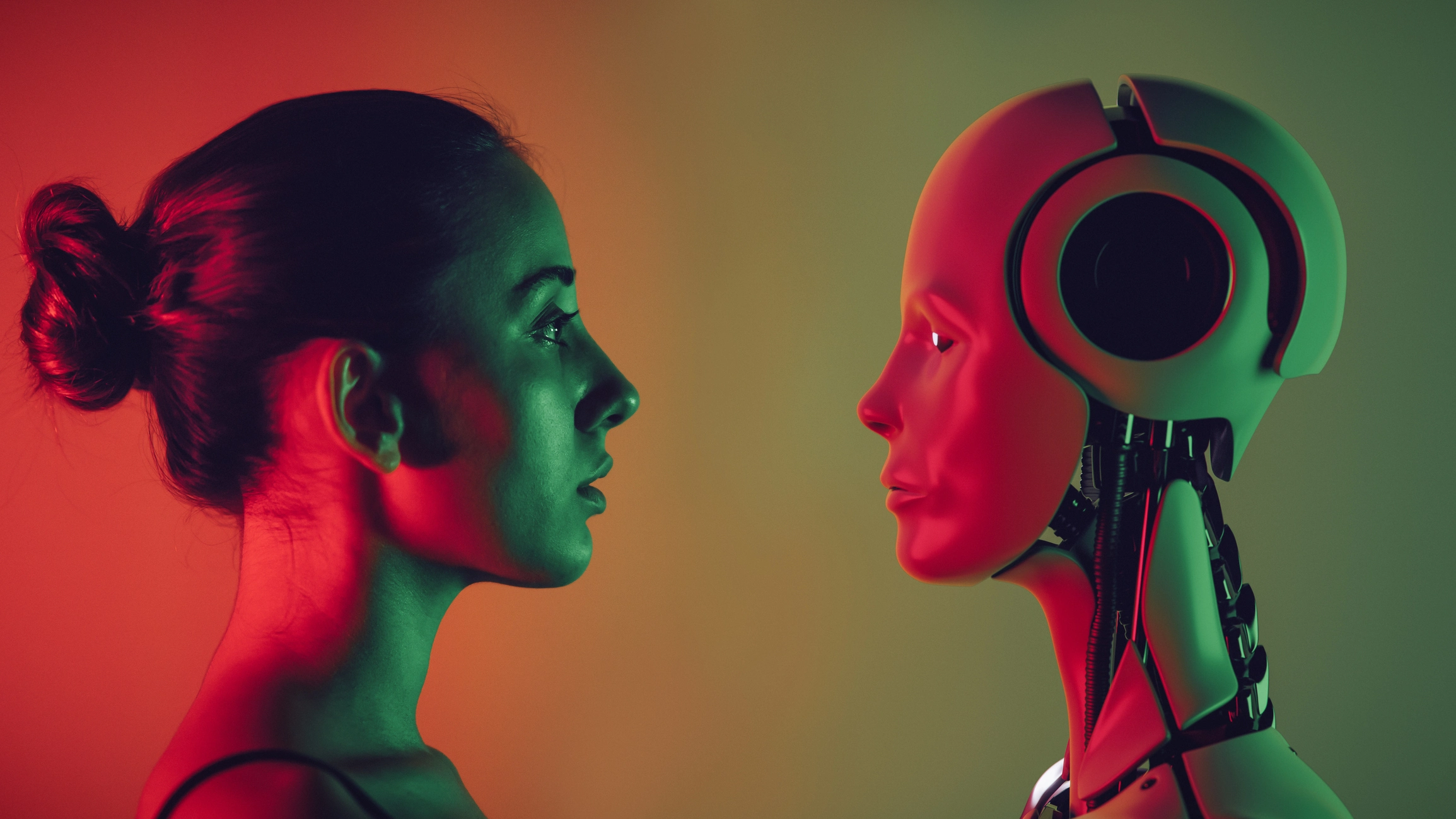

It’s part of a worrying trend of non-consensual “deepfake” pornography being developed and distributed because of advances in artificial intelligence.