The thing is, for the next 3-5 years, talent holds all the cards here.

It will eventually flip, once the critical 20% in the 80/20 split of a key character performance can be fully automated, but that's years away.

Until then, studios that want high-quality AI-generated performances are going to need to work closely with the talent that can produce the baseline to scale out from.

And the whole job loss thing is honestly overblown. Of course there are going to be companies chasing short-term profits at the cost of long-term consequences, but the vast majority of those bets are going to continue to blow up in their faces.

In reality, labor demand isn't going to stay constant while synthetic labor increases supply. What's going to happen is that demand spikes rapidly as supply increases.

You won't see a game with a 40-person writing team cutting staff to 4 people to produce the same scope of game. You'll see a 40-person writing team working to create a generative AI pipeline that enables an order of magnitude increase in world detail and scope.

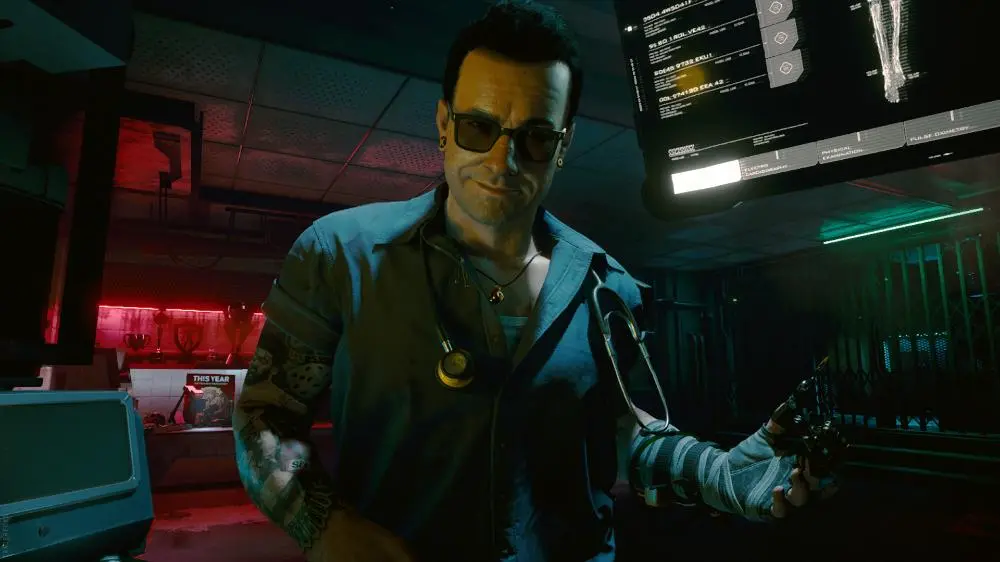

It's almost painful playing games these days and seeing the gap between what's here today and what's right around the bend. Take Cyberpunk 2077: it has incredibly detailed world assets, dialogue, and interiors in the places the main and side quests take you, but the NPCs on the sidewalk all say the same things in voices that don't even match their models, and most buildings off the beaten path are inaccessible.

Think of just how much work went into RDR2 giving each NPC unique-ish responses to a single round of dialogue from the player character, and how much of a difference that made to immersion, even though it's still only surface deep.

Rather than "let's fire everyone and keep making games the same way we did before," staffing is going to stay around the same, but you'll see indie teams able to produce games closer to what bigger studios make today, and big studios making games that would have been unthinkable just a few years ago.

The bar adjusts as technology advances. It doesn't remain the same.

Yes, large companies are always going to try to pinch every penny. But over the next few years, the difference between a fully synthesized voice performance that skirts unions and a performer-integrated generation platform tailored to the specific characters being represented is going to be night and day. Audiences and reviewers aren't going to react well to cut corners as long as flagship examples of it being done right are being released too.

The fearmongering is being blown out of proportion, and at a certain point it actually becomes counterproductive. If too many people within a given sector buy into the BS and become obtusely contrarian to progress rather than adaptive, you'll see them shoot themselves in the foot the same way the RIAA/MPAA did years ago, fighting tooth and nail to prevent digital distribution. That left the door open for third parties to seize the future, when building and adapting into that future themselves would have been the most profitable approach in retrospect.