Breast Cancer

No link or anything, very believable.

You could participate or complain.

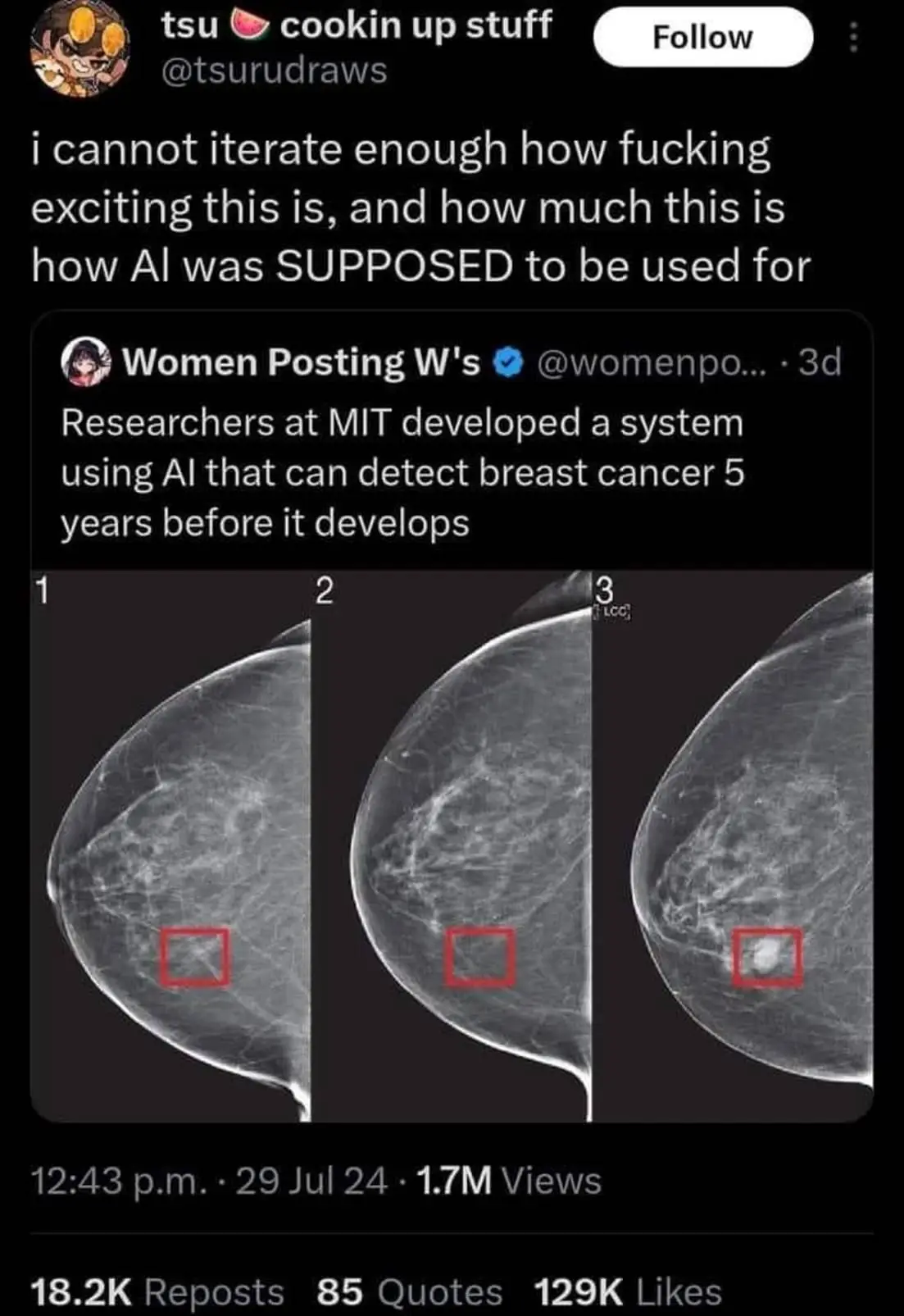

https://news.mit.edu/2019/using-ai-predict-breast-cancer-and-personalize-care-0507

Complain to whom? Some random Twitter account? Why would I do that?

No, here. You could have asked for a link or Googled it.

I am commenting on this tweet being trash, because it doesn't have a link in it.

Honestly this is a pretty good use case for LLMs and I've seen them used very successfully to detect infection in samples for various neglected tropical diseases. This literally is what AI should be used for.

Sure, agreed. Too bad 99% of its use is still stealing from society to make a few billionaires richer.

I also agree.

However, these medical LLMs have been around for a long time, and they don't use horrific amounts of energy, nor do they make billionaires richer. They are the sort of thing a hobbyist can put together, provided they have enough training data. Further to that, they can run offline, allowing doctors to perform tests in the field, as I can attest from witnessing it first hand during soil-transmitted helminth surveys in Mozambique. That means that instead of checking thousands of stool samples manually, those same people can be paid to collect more samples or distribute the drugs that cure the disease in affected populations.

Worth noting: the type of comment this is responding to argues that home users should be legally forbidden from accessing training data, and it wants a world where only the richest companies can afford to license training data (which will be owned by their other rich friends, thanks to it being posted on their sites).

Supporting heavy copyright extensions is the dumbest position anyone could have.

I highly doubt the medical data needed to do this is available to a hobbyist, or that someone like that would have the know-how to train the AI.

But yeah, a rare non-bad use of AI. Now we just need to eat the rich to make it good for humanity. Let's get to that, I say!

Actually the datasets for this MDA stuff are widely available.

You don't understand how they work, and that's fine, but you're upset based on paranoid guesswork that has filled in the gaps in your understanding, and that's sad.

No one is stealing from society; "society" isn't being deprived of anything when AI looks at an image. The research is pretty open, and humanity is benefiting from it in the same way it benefited from Tesla, Westinghouse, and Edison in the history of electrical research.

And yes, if you're about to tell me Edison did nothing but steal, then that is another bit of tech history you've not paid attention to beyond memes.

The big companies you hate, like Meta or Nvidia, are producing papers that explain their methods; you can follow along at home and make your own model, though with those examples you don't need to, because they've released models under open licenses. It seems likely you don't understand how this all works or what's happening, because Zuck is doing significantly more to help society than you are. Ironic, huh?

And before you tell me about Zuck doing genocide or other childish arguments: we're on Lemmy, which was purposefully designed to remove power from a top-down authority, so if an instance pushed for genocide we would have zero power to stop it. The report you're no doubt going to allude to says that Facebook is culpable because it did not have adequate systems in place to control locally run groups...

I could make good arguments against Zuck, and I don't think anyone should be able to be that rich. But it's funny to me when a group freely shares PyTorch and other key tools used to detect cancer cheaply and efficiently, help impoverished communities access education and health resources in their local language, help blind people gain independence, and all the many other positive uses for AI, and you shit on it all simply because you're too lazy and selfish to do anything materially constructive to help anyone or anything that doesn't directly benefit you.

LLMs do language, not images.

These models aren't LLM based.