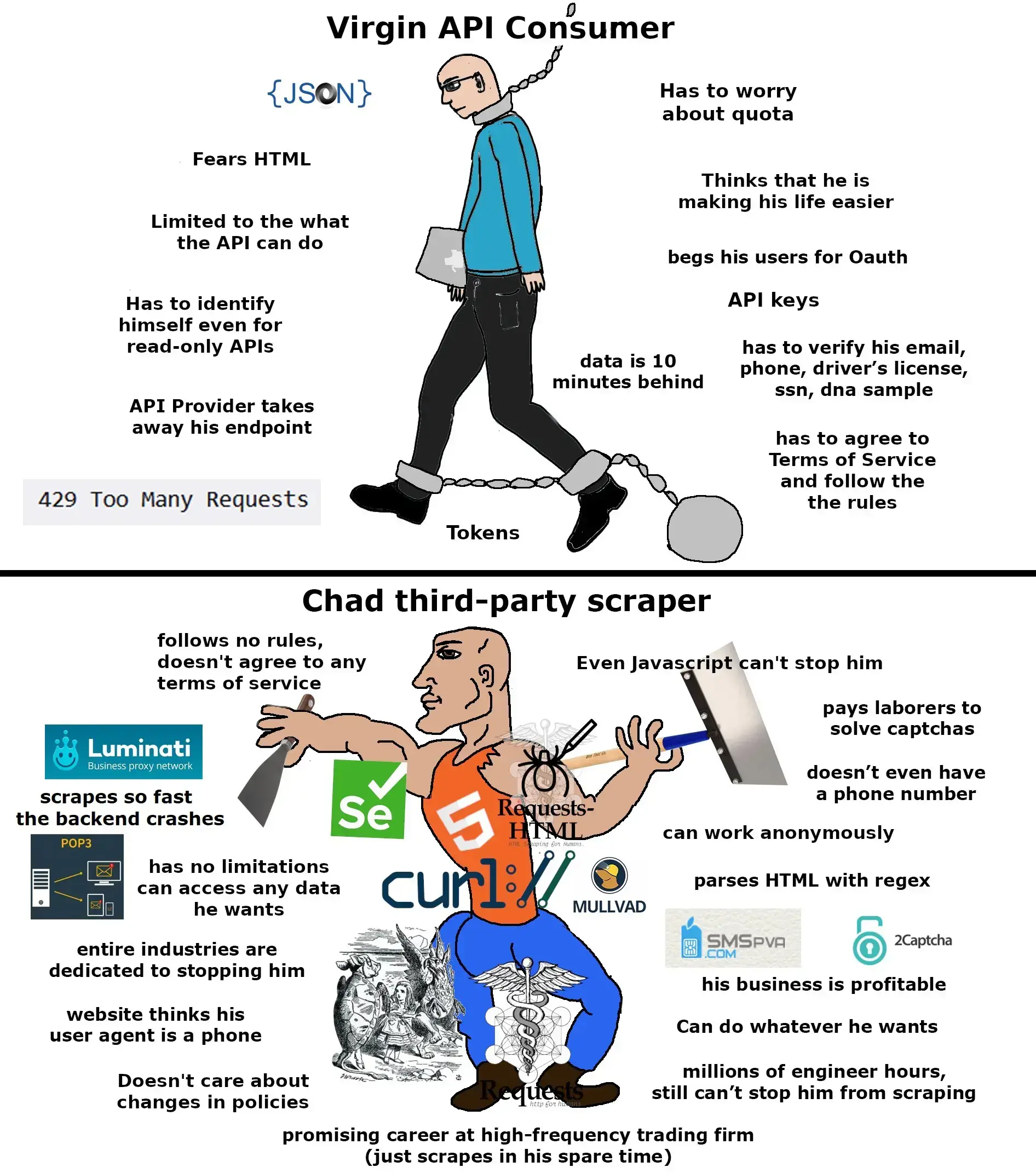

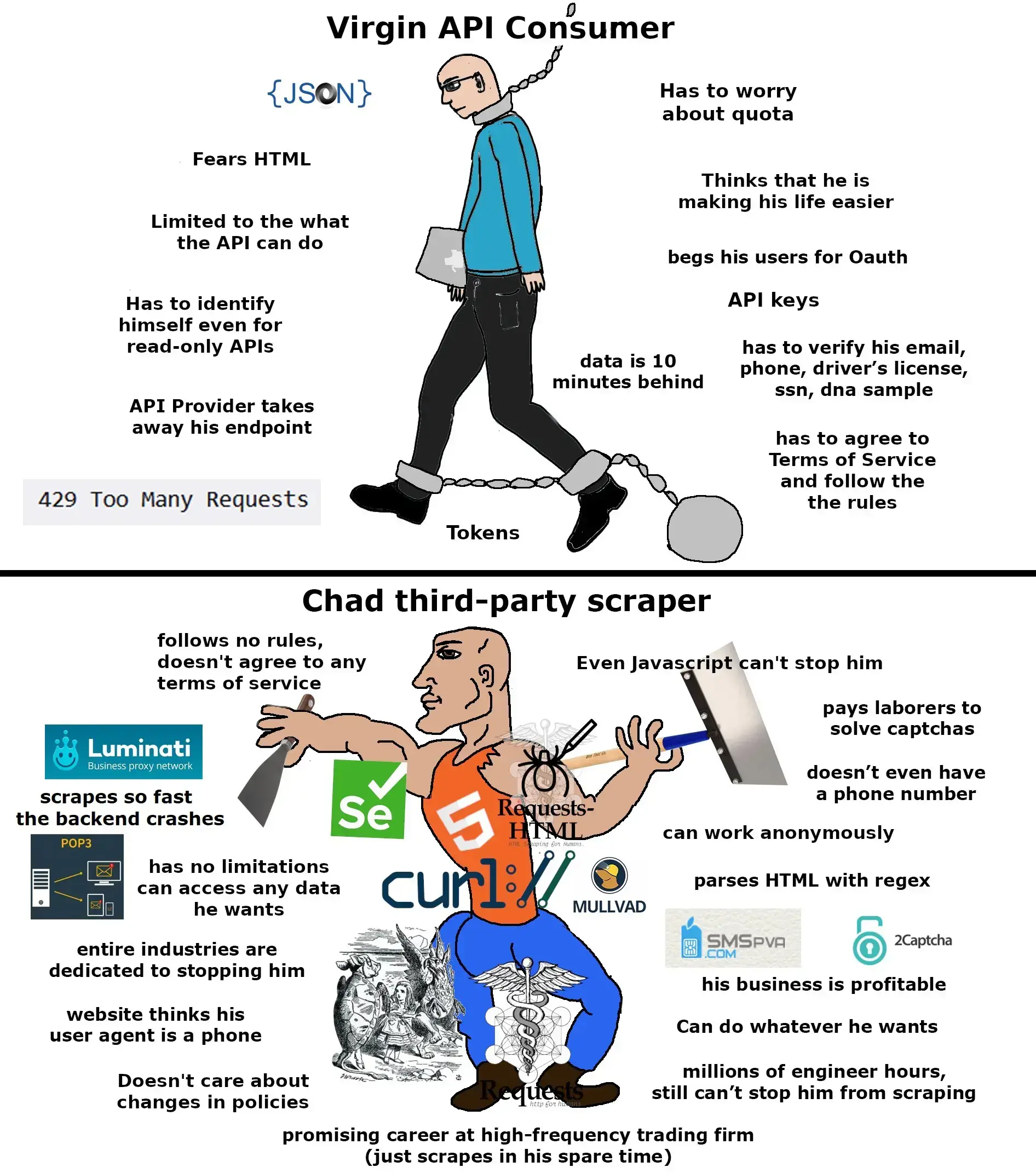

Chad scraper

I’ve just discovered Selenium and my life has changed.

I created a shitty script (with ChatGPT's help) that uses Selenium and can dump a Confluence page from work, all its subpages and all linked Google Drive documents.

How so?

When a customer needs a part replaced, they send in shipping data. This data has to be entered into 3-4 different web forms and an email. Selenium lets me automate it all from a single form that has built-in error checking, so human mistakes are limited.
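For anyone curious what "built-in error checking" could look like, here is a rough sketch and not the actual script. The field names and the tracking-number rule are made up for illustration; the real forms will have their own requirements.

```python
import re

# Hypothetical required fields; the real forms will differ.
REQUIRED_FIELDS = ("customer_name", "part_number", "tracking_number", "postal_code")


def validate_shipping_data(data):
    """Return a list of problems found; an empty list means the data looks OK."""
    errors = []
    for field in REQUIRED_FIELDS:
        if not str(data.get(field, "")).strip():
            errors.append(f"{field} is missing")

    tracking = str(data.get("tracking_number", "")).strip()
    # Assumed rule: a tracking number is 8 to 30 letters or digits.
    if tracking and not re.fullmatch(r"[A-Za-z0-9]{8,30}", tracking):
        errors.append("tracking_number looks malformed")
    return errors
```

The idea is to run this once on the single input form and refuse to touch any of the downstream web forms until the list comes back empty.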

Company could probably automate this all in the backend but they won’t :shrug:

Using Selenium for this is probably overkill. You might be better off sending direct HTTP requests with your form data. This way you don't actually have to spin up an entire browser to perform that simple operation for you.
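To make that concrete, here is a minimal sketch using only the Python standard library. The URL and field names are placeholders I made up; you'd find the real ones by watching the form submission in your browser's network tab.

```python
import urllib.parse
import urllib.request


def build_form_request(url, fields):
    """Build a POST request that mimics a plain HTML form submission."""
    # Encode the fields the way a browser does for a regular form post.
    body = urllib.parse.urlencode(fields).encode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Hypothetical endpoint and fields, for illustration only.
req = build_form_request(
    "https://example.com/parts/replace",
    {"order_id": "12345", "tracking": "1Z 999"},
)
# Actually sending it would be: urllib.request.urlopen(req)
```

No browser involved, so it starts instantly and is easy to run in a loop over several forms.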

That said, if it works, it works!

I'm guessing forms like this have CSRF protection, so you'd probably have to obtain that token and hope they don't make a new one on every request.

Good point. That can be overcome with one additional HTTP request and some HTML parsing, which is still less overhead than running Selenium! In any event, I was replying in a general sense: Selenium is easy to understand and feels like an intuitive solution to a simple problem, but in most cases a bit of additional effort will result in a more efficient solution.
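A rough sketch of the "one extra request plus some parsing" approach, again standard library only. I'm assuming the token lives in a hidden input named `csrf_token` and that the form posts back to the same URL it was served from; both are guesses, and real sites vary (some put the token in a header or a meta tag instead).

```python
import urllib.parse
import urllib.request
from html.parser import HTMLParser
from http.cookiejar import CookieJar


class HiddenInputParser(HTMLParser):
    """Collects name/value pairs from <input type="hidden"> elements."""

    def __init__(self):
        super().__init__()
        self.hidden = {}

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "input" and (a.get("type") or "").lower() == "hidden" and a.get("name"):
            self.hidden[a["name"]] = a.get("value") or ""


def extract_csrf_token(html, field_name="csrf_token"):
    """Return the hidden field's value, or None if the field is absent."""
    parser = HiddenInputParser()
    parser.feed(html)
    return parser.hidden.get(field_name)


def submit_with_csrf(form_url, fields, field_name="csrf_token"):
    """GET the form, pull out the token, then POST the fields along with it."""
    # A cookie-aware opener keeps the session cookie between the GET and the POST.
    opener = urllib.request.build_opener(
        urllib.request.HTTPCookieProcessor(CookieJar())
    )
    with opener.open(form_url) as resp:
        page = resp.read().decode("utf-8", errors="replace")

    token = extract_csrf_token(page, field_name)
    if token is None:
        raise ValueError(f"no hidden input named {field_name!r} on the page")

    body = urllib.parse.urlencode({**fields, field_name: token}).encode("ascii")
    # Passing data makes this a POST; assumes the form posts to form_url.
    with opener.open(form_url, data=body) as resp:
        return resp.status
```

Since the token is fetched fresh right before each submission, it doesn't matter if the site rotates it on every request.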