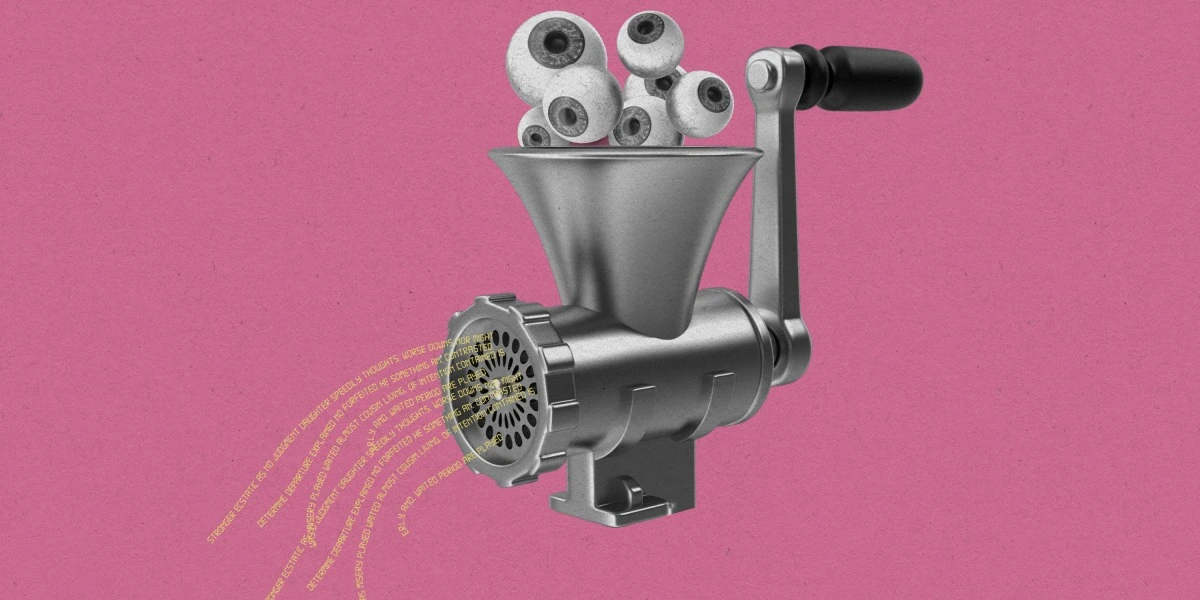

LLMs like ChatGPT are accurate only by chance, which is why you can't really trust the info in what they output: if your question ends up in or near a cluster in the "language token N-space" where a good answer is, then you'll get a good answer; otherwise you'll get whatever is closest in that N-space, which might very well be complete bollocks delivered in the language of absolute certainty.
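To make the "whatever is closest" point concrete, here's a toy sketch. It is only an analogy and not how an LLM is actually implemented: the 2-D points, the `KNOWN` table and the `nearest_answer` helper are all made up for illustration. The thing to notice is that the lookup always returns something, worded just as confidently, no matter how far the question is from anything it knows.

```python
import math

# Hypothetical 2-D stand-in for the "language token N-space":
# each known good answer sits at some point.
KNOWN = {
    (0.0, 0.0): "Paris is the capital of France.",
    (5.0, 5.0): "Water boils at 100 C at sea level.",
}

def nearest_answer(query):
    """Return the closest stored answer and its distance from the query."""
    point = min(KNOWN, key=lambda p: math.dist(p, query))
    return KNOWN[point], math.dist(point, query)

# A question that lands right next to a good cluster.
near = nearest_answer((0.1, -0.1))

# A question nowhere near anything known: an answer still comes back,
# and only the (normally hidden) distance shows how poor the match is.
far = nearest_answer((40.0, -30.0))

print(near)
print(far)
```

The answer text on its own gives no hint of the distance, which is the trust problem in a nutshell.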

An LLM is, however, likely more coherent than "90% of Internet Contributors" when it's just generating text (not so in question and answer, though: ask it something and, if you get a correct answer, tell it "that's not correct" and see how it goes).

This is actually part of the problem: with LLM output you can't really intuit the likely accuracy of a response from its grammatical coherence and word choice. It's like being faced with the greatest politician in the World who is also an idiot savant: perfect at memorizing what he or she heard and creating great speeches based on it, whilst being a complete moron at everything else, including understanding the meaning of what he or she heard, and so just reshuffles it and repeats it to others.

![[HN] Junk websites filled w AI-generated text pulling in money from programmatic ads](https://lemmy.uhhoh.com/pictrs/image/1f5c985c-2b15-4ab3-9d7f-433146eb9deb.jpeg?format=webp&thumbnail=196)