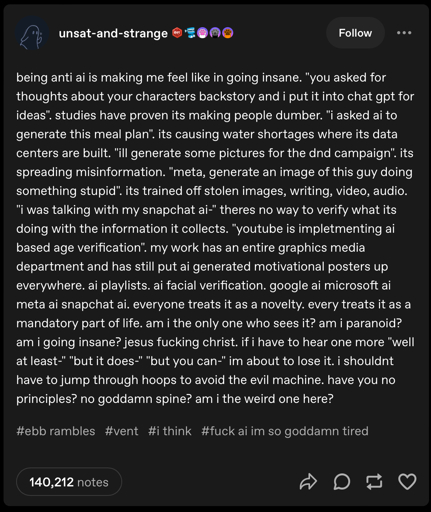

Is It Just Me?


No, it's not just you or unsat-and-strange. You're pro-human.

Trying something new when it first comes out or when you first get access to it is novelty. What we've moved to now is mass adoption. And that's a problem.

These LLMs automate mass theft and produce a good-enough regurgitation of the stolen data. This is unethical for the vast majority of business applications. And good enough is insufficient in most cases, software included.

I had a lot of fun playing around with AI when it first came out. And people figured out prompts I can't seem to replicate. I don't begrudge people for trying a new thing.

But if we aren't going to regulate AI or teach people how to avoid AI-induced psychosis, then even in applications where it could be useful, it's a danger to anyone who uses it. Not to mention how wasteful its water and energy usage is.

Regulate? That's what the leading AI companies are pushing for: they could get through the bureaucracy, but their competitors couldn't.

The shit just needs to be forced to be open source. If you steal content from the entire world to build a thinking machine, give back to the world.

This would also crash the bubble and slow down the most unethical for-profits.

> Regulate? That's what the leading AI companies are pushing for: they could get through the bureaucracy, but their competitors couldn't.

I was referring to this in my comment:

Congress decided not to go through with the AI-law moratorium. Instead, it opted to do nothing, which is what AI companies would prefer the states to do. Not to mention that the pro-AI argument appeals to the judgement of Putin, who is notorious for being surrounded by yes-men and his own state propaganda, and for the genocide of Ukrainians in pursuit of the conquest of Europe.

“There’s growing recognition that the current patchwork approach to regulating AI isn’t working and will continue to worsen if we stay on this path,” OpenAI’s chief global affairs officer, Chris Lehane, wrote on LinkedIn. “While not someone I’d typically quote, Vladimir Putin has said that whoever prevails will determine the direction of the world going forward.”

> The shit just needs to be forced to be open source. If you steal content from the entire world to build a thinking machine, give back to the world.

The problem is that, unlike Robin Hood, AI stole from the people and gave to the rich. The intellectual property of artists and writers was stolen, and the only way to give it back is to compensate them, which currently seems unlikely to happen. Letting everyone see how the theft machine works under the hood doesn't compensate anyone for the use of that intellectual property.

> This would also crash the bubble and slow down the most unethical for-profits.

Not really. It would let more people get in on it, and most tech companies are already in on it. This wouldn't impose any costs on AI development. At this point the speculation is primarily on what comes next. If open source were going to burst the bubble, it would have happened when DeepSeek was released. We're still talking about the bubble bursting in the future, so that clearly didn't happen.

Forced open-sourcing would totally destroy the profits, because you spend money on research and then you open-source it, so anyone can just grab your model without paying you a cent. Where would the profits come from?

> IP of writers

I mean yes, and? AI is still shitty at creative writing. Unlike with images, it's not like people one-shot a decent book.

> give it to the rich

We should push to make high-VRAM devices accessible. This is literally about the means of production; we should fight for equal access. Regulation is the reverse of that: it gives those megacorps the unique ability to run it on the grounds that everyone else is too stupid to control it.

> OpenAI

They were the most notorious proponents of regulation. There are lots of talks with OpenAI devs where they just doomsay about the dangers of AGI and how it must be kept top secret and controlled by governments.

> Forced open-sourcing would totally destroy the profits, because you spend money on research and then you open-source it, so anyone can just grab your model without paying you a cent.

> DeepSeek was released

The profits were not destroyed.

> Where would the profits come from?

> At this point the speculation is primarily on what comes next.

People are betting on what they think LLMs will be able to do in the future, not what they do now.

> I mean yes, and?

It's theft. They stole the work of writers and all sorts of content creators. That's the wrong that needs to be righted, not how to reproduce the crime. The only way to right intellectual property theft is to pay the owner of that intellectual property the money they would have gotten if they had willingly leased it out as part of a deal. Corporations like Nintendo, Disney, and Hasbro hound people who do anything unapproved with their intellectual property. The idea that we're yes-and-ing away the intellectual property of all humanity is laughable in a discussion supposedly about ethics.

> We should push to make high-VRAM devices accessible.

That's a whole other topic, but what we should fight for now is worker-owned corporations. While that is an excellent goal, on its own it doesn't help undo the theft that was done; it only allows more people to profit off that theft. If we care about ethics, we should also compensate the people who were stolen from. Also, compensating writers and artists seems like a good reason to take all the money away from the billionaires.

> There are lots of talks with OpenAI devs where they just doomsay about the dangers of AGI and how it must be kept top secret and controlled by governments.

> OpenAI’s chief global affairs officer, Chris Lehane, wrote on LinkedIn

Looks like the devs aren't in control of the C-suite. Whoops, all avoidable capitalist-driven apocalypses.

> it's theft

So are all the papers, all the conversations on the internet, all the code, etc. So what? Nobody will stop the AI train. You would need a Butlerian Jihad-type event to make that happen. Even if a class action were won, the payouts would be so laughable that nobody would even apply.

> DeepSeek

DeepSeek didn't open-source any of the proprietary AIs that corporations have. I'm talking about forcing OpenAI to open-source all of their AI, or close the company if they don't comply.

> betting on the future

OK, a new AI model drops, it's open source, I download it and run it on my rack. Where are the profits?

> So what?

So appealing to ethics was bullshit, got it. You just wanted the automated theft tool.

> DeepSeek

It kept some things hidden, but it was the most open-source LLM we've got.

> OK, a new AI model drops, it’s open source, I download it and run it on my rack. Where are the profits?

From the next new AI model that can do the next new thing. The entire economy is based on speculative investment. If you can't improve on the AI model on your machine, you're not getting any investor money. edit: typos

The bubble has burst, or rather, it is currently in the process of bursting.

My job involves working directly with AI, LLMs, and companies that have leveraged their use. It didn't work. I'd say the majority of my clients are now scrambling to recover or simply to make it out the other end alive. Soon there's going to be nothing left to regulate.

GPT-5 was a failure. The rumors I've been hearing are that Anthropic's new model will be a failure much like GPT-5. The house of cards is falling as we speak. This won't be the complete death of AI, but it's just like the dot-com bubble. It was bound to happen. The models have nothing left to eat, and they're getting desperate to find new sources. For a good while they've quite literally been eating each other's feces. Now they're starting to consume Git repos, of all things. Codeberg can tell you all about that from this past week. This is why I'm telling people to consider setting up private Git instances and locking that crap down. If you're on GitHub, get your shit off there ASAP, because Microsoft is beginning to feast on your repos.

But essentially the AI is starving. Companies have discovered that vibe coding and leveraging AI to build things end to end didn't work. Nothing it produced scales; it's all full of exploits or, in most cases, has zero security measures whatsoever. They all sank money into something that has yet to pay out. Just go on LinkedIn and see all the tech bros desperately trying to save their own asses right now.

> the bubble is bursting.

The folks I know at both OpenAI and Anthropic don’t share your belief.

Also, anecdotally, I’m only seeing more and more push for LLM use at work.

That's interesting, in all honesty, and I don't doubt you. All I know is that my bank account has been getting bigger over the past few months thanks to new work from clients looking to fix their AI problems.

I think you’re onto something: a lot of this AI mess is going to have to be fixed by actual engineers. Folks who blindly copied from Stack Overflow without any understanding were always going to have a bad time, and what we’re seeing here seems equivalent.

I think the AI hate is overblown and I tend to treat it more like a search engine than something that actually does my work for me. With how bad Google has gotten, some of these models have been a blessing.

My hope is that the models remain useful, but the bubble of treating them like a competent engineer bursts.

Agreed. I'm with you: it should be treated as a basic tool, not something used to actually create things, which, again in my current line of work, is what many places have done. It's a fantastic rubber duck. I use it myself for that purpose, or for tasks I can't be bothered with, like writing README markdown files or commit messages, setting up Nix flakes and shells, and creating base project structures so YOU can do the actual work and don't have to waste time setting things up.

The hate can be overblown, but I can see where it's coming from, purely because many companies haven't used it as a tool and have instead treated it as a replacement for a person.

At the risk of going off on a tangent, LLMs' survival doesn't depend solely on consumer and business confidence. In the US, we are living in a fascist dictatorship. Fascism and fascists are inherently irrational. Trump, a fascist, wants to bring back coal despite the market naturally phasing coal out.

The fascists want LLMs because they hate art and all things creative. So the fascists may very well choose to have the federal government invest in LLM companies, like how they bought 10% of Intel's stock or how they want to build coal-powered freedom cities.

So even if there are no business applications for LLM technology, our fascist dictatorship may still try to impose it on all of us, purely out of hatred for us, for art, and for life itself. edit: looks like I commented this under my own comment the first time